Anthropic just made the AI healthcare race a lot more interesting. The company announced Claude for Healthcare this week, a specialized version of its AI assistant that can sync with health data from phones, smartwatches, and medical platforms to provide personalized health insights. The product launches just days after OpenAI revealed ChatGPT Health, making clear that both leading AI companies see medicine as the next major frontier for their technology.

The timing isn’t coincidental. Healthcare is one of the few domains where AI capabilities might translate directly into massive revenue. Americans spend more than $4.5 trillion a year on healthcare, and anything that makes diagnosis faster, research easier, or administrative work less burdensome could capture a significant share of that spending. Both Anthropic and OpenAI are racing to establish positions before healthcare systems make lasting technology choices.

What sets Claude for Healthcare apart from earlier medical AI offerings is the depth of its integrations. Rather than answering health questions from general knowledge alone, the system can draw on specific databases, the research literature, and a user’s own health data to provide personalized analysis. The connectors Anthropic has built include the CMS Coverage Database, ICD-10 diagnostic codes, the National Provider Identifier (NPI) Registry, and PubMed’s research repository.

What Claude for Healthcare Actually Does

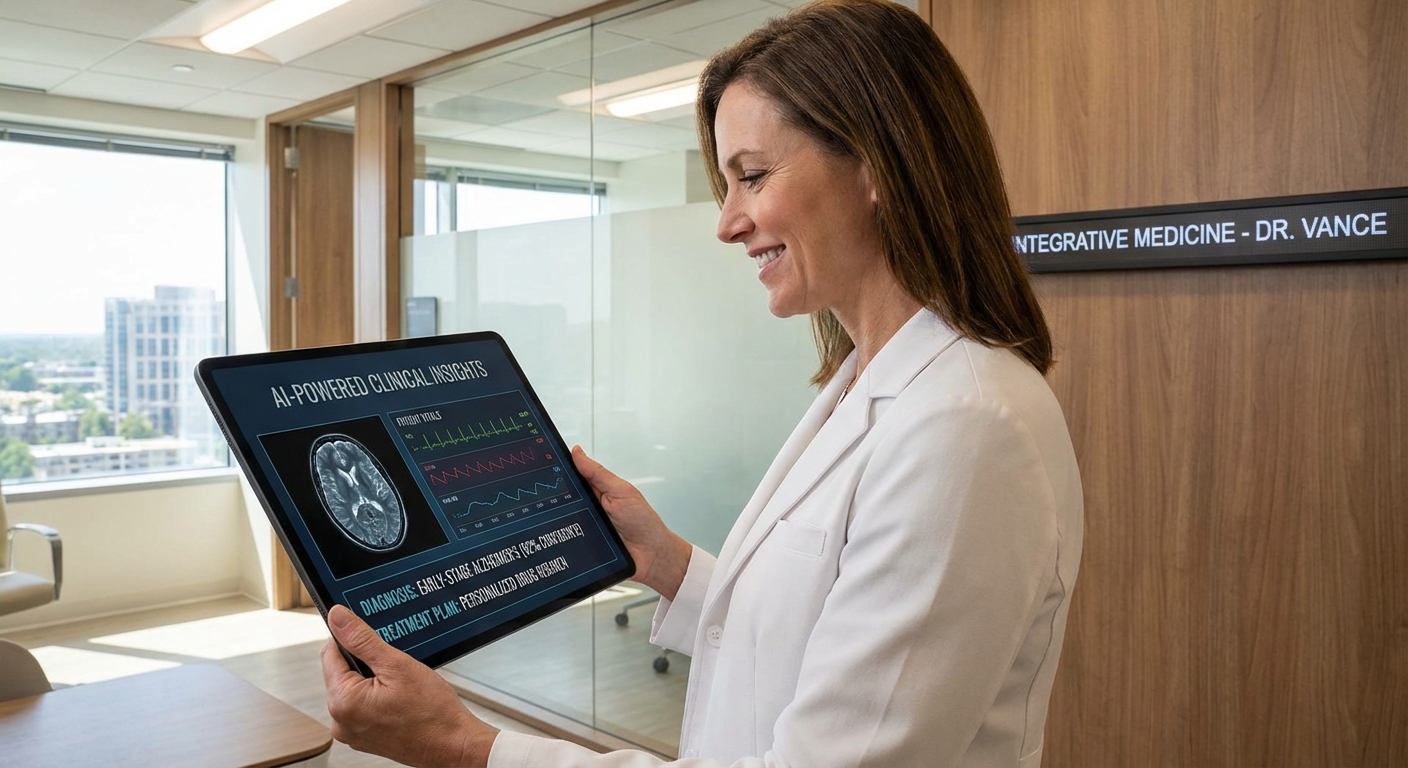

The system operates on two levels: consumer-facing health assistance and professional-grade research tools. For regular users, Claude can analyze data from Apple Health, Google Fit, and various wearables to identify patterns, flag anomalies, and suggest when professional consultation might be warranted. It can explain test results, research conditions, and help users prepare questions for doctor visits.

For healthcare professionals, the capabilities extend much further. Claude can search medical literature, synthesize research findings, generate clinical documentation, and assist with complex diagnostic reasoning. The system has been trained on medical texts and validated against clinical benchmarks, though Anthropic is careful to note it’s intended to assist rather than replace physician judgment.

The database connectors deserve attention because they address a fundamental limitation of earlier medical AI: lack of access to authoritative information sources. When Claude can query PubMed directly, it can ground its answers in published research rather than relying only on training data that may be outdated. When it can look up ICD-10 codes, it works in the same classification system physicians use, reducing translation errors.

Privacy considerations are significant. Anthropic states that user health data is processed under healthcare privacy standards and is not used to train AI models. Users must explicitly consent to data access, and they can revoke that access at any time. Whether these protections prove adequate remains to be seen, but the company has clearly anticipated the privacy concerns that have slowed adoption of earlier AI health products.

The Competition With OpenAI

OpenAI’s announcement came only days before Anthropic’s, suggesting both companies reached similar conclusions about the market opportunity at about the same time. The OpenAI product appears more focused on consumer chat experiences, helping users understand symptoms and navigate the healthcare system. Claude for Healthcare targets both consumer and professional use cases, with particular emphasis on research applications.

The strategic differences reflect the companies’ broader positioning. OpenAI has focused on scale and mass-market adoption, making ChatGPT the default AI assistant for hundreds of millions of users. Anthropic has positioned Claude as more thoughtful and careful, appealing to enterprise customers and professional users who need reliability more than flash.

In healthcare specifically, the emphasis on reliability matters enormously. Medical AI that is wrong even occasionally can cause harm, which makes an accuracy-focused positioning potentially better suited to this domain. That positioning sits alongside a broader emphasis on efficiency: Anthropic president Daniela Amodei has described the company’s approach as doing “more with less,” prioritizing capability per dollar of compute over simply scaling to maximum size.

Both companies face the same fundamental challenge: getting healthcare systems to actually adopt their products. Hospitals and clinics operate under regulatory constraints, liability concerns, and workflow requirements that make new technology adoption slow and difficult. Having a great product matters less than having the sales infrastructure and integration support to actually deploy it.

The Broader AI Healthcare Landscape

Claude for Healthcare and ChatGPT Health arrive in a market already populated by specialized medical AI tools. Companies like Nuance (owned by Microsoft) have automated clinical documentation for years. Diagnostic AI from firms like Tempus and PathAI is being integrated into radiology and pathology workflows. The question is whether general-purpose AI assistants can compete with purpose-built tools or whether they serve different needs.

The generalist approach has advantages in flexibility. A doctor who uses Claude might ask about treatment options, have it summarize recent research, help draft a patient communication, and analyze lab trends, all in the same conversation. Purpose-built tools do one thing well but require switching between multiple systems. The convenience of integration could drive adoption even if individual capabilities are somewhat less specialized.

Regulatory considerations will shape how quickly these products can be deployed for clinical use. AI that assists with diagnosis or treatment recommendations may require FDA clearance, a lengthy process that has slowed other medical AI products. Anthropic and OpenAI are positioning their tools as assistants to physicians rather than autonomous diagnostic systems, potentially avoiding the strictest regulatory requirements.

The liability question remains unresolved. If a physician relies on AI-generated analysis and that analysis proves wrong, who bears responsibility? Medical malpractice frameworks weren’t designed for AI-assisted care, and courts haven’t established clear precedents. Healthcare systems are cautious about adopting technology that might create new liability exposure, regardless of how useful it seems.

What This Means for You

If you’re a consumer curious about AI health assistants, Claude for Healthcare could be a meaningful upgrade over what was previously available. Syncing actual health data, rather than just describing symptoms, allows more personalized and useful responses, and the database connectors let answers draw on authoritative sources rather than potentially outdated training data.

The most practical near-term use cases involve research and preparation rather than diagnosis. Using Claude to understand a condition you’ve been diagnosed with, research treatment options before discussing them with your doctor, or interpret test results makes sense. Using it to decide whether symptoms require medical attention carries more risk, though it might be better than the alternative of WebMD-induced panic.

For healthcare professionals, the tools could meaningfully reduce the administrative burden that makes medicine frustrating. Documentation alone consumes hours of physician time that could go toward patient care. Research synthesis that currently requires reading dozens of papers could take minutes. The productivity gains could be substantial if accuracy holds up under real-world use.

The Bottom Line

Anthropic’s Claude for Healthcare launch marks an escalation in the AI healthcare race that could reshape how both consumers and professionals interact with medical information. The integration with health data platforms and authoritative medical databases addresses limitations that made earlier AI health tools less useful. Competition with OpenAI’s ChatGPT Health is likely to drive rapid iteration and improvement.

The technology is genuinely capable of useful assistance, but the adoption challenges are significant. Healthcare systems move slowly, liability concerns are real, and regulatory requirements create uncertainty. The companies launching these products are betting that capability will eventually overcome institutional resistance. That bet will take years to play out.

For now, the practical advice is to explore these tools for research and preparation while maintaining healthy skepticism about AI-generated medical advice. The technology is good enough to be useful but not good enough to trust blindly. That balance will shift over time as capabilities improve and use patterns become clearer, but we’re not there yet.