DeepSeek, the Chinese AI company that rattled Silicon Valley a year ago with its remarkably efficient R1 model, is preparing to do it again. According to insiders who spoke with The Information, the Hangzhou-based startup plans to release its V4 model around mid-February, timed to coincide with Chinese New Year. The company has already published new technical papers and expanded its R1 documentation by over 60 pages, moves analysts interpret as clearing the decks for a major announcement.

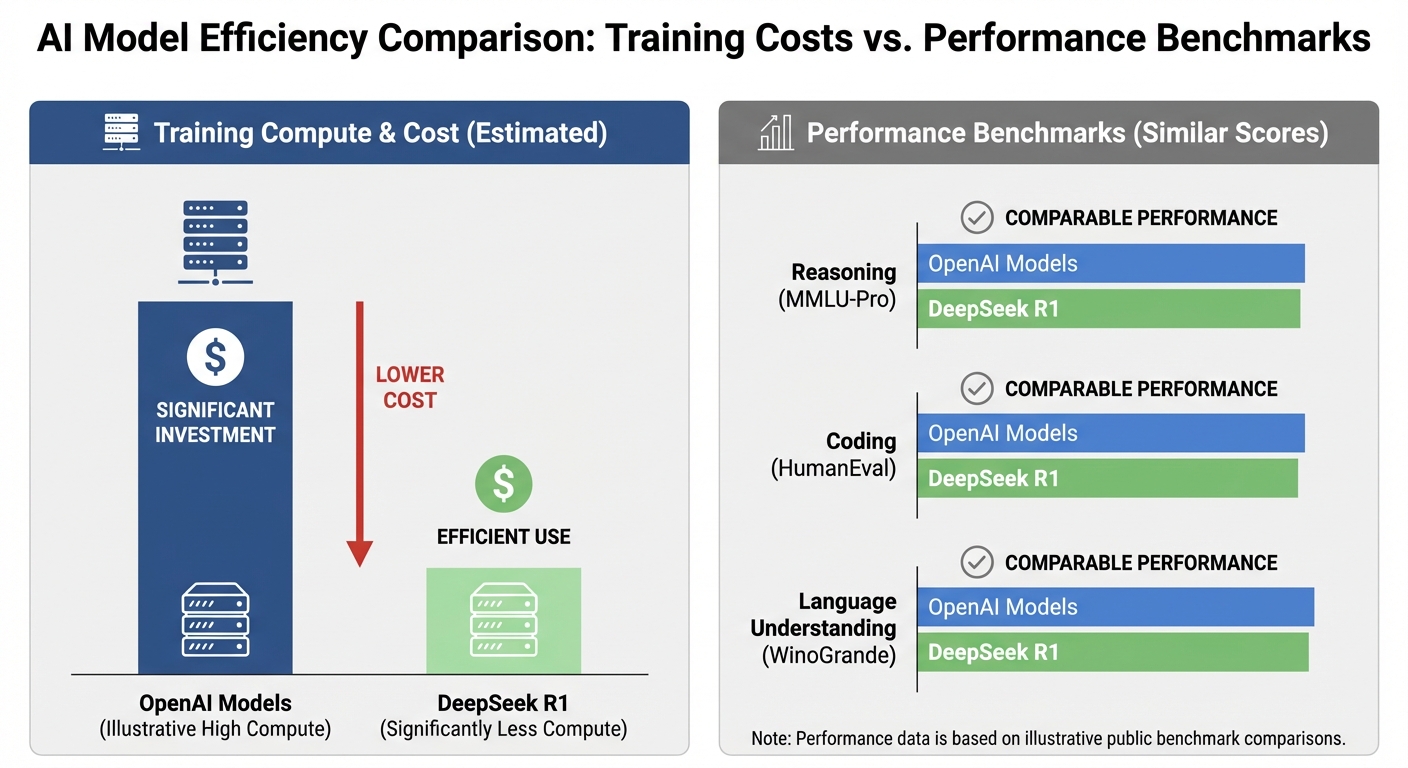

The original R1 release in January 2025 was described as a “Sputnik moment” that shook the tech industry. DeepSeek demonstrated that its model could match or exceed OpenAI’s o1 on key benchmarks while reportedly costing only $6 million to train, compared to estimates of $100 million or more for comparable American models. Within weeks, the app had become the most downloaded free application in dozens of countries.

V4 is expected to target coding capabilities specifically, an area where DeepSeek has shown particular strength and where American models have historically led. If the company can achieve performance parity or better in code generation while maintaining its cost advantages, the implications for the broader AI industry would be significant. Enterprise software development represents one of AI’s most commercially valuable applications.

What Made R1 So Disruptive

DeepSeek’s achievement with R1 wasn’t just about matching performance; it was about how efficiently the company did it. American AI labs have operated under the assumption that frontier models require massive compute investments, both for training and inference. DeepSeek challenged that assumption by demonstrating that clever engineering could substitute for brute-force scaling.

Dimitris Papailiopoulos, principal researcher at Microsoft’s AI Frontiers research lab, noted that what surprised him most about R1 was its “engineering simplicity.” DeepSeek prioritized accurate answers over detailed reasoning traces, significantly reducing computing time while maintaining effectiveness. The approach suggested that American labs might be over-engineering their solutions.

The cost efficiency matters because it changes the economics of AI deployment. If comparable capabilities can be achieved for less money, the barriers to AI adoption drop dramatically. Companies that couldn’t justify enterprise AI contracts might reconsider. Countries that couldn’t afford to develop AI capabilities might find paths forward.

From a geopolitical perspective, DeepSeek’s success was particularly notable because it occurred despite US export controls designed to prevent China from accessing advanced AI chips. Ahead of anticipated sanctions, DeepSeek founder Liang Wenfeng had acquired thousands of Nvidia A100 chips, which are now banned from export to China. The company proved it could do more with limited hardware than American labs expected.

The V4 Expectations

DeepSeek’s recently expanded technical documentation for R1 suggests the company wants to establish credibility before V4’s release. The updated whitepaper reveals the full training recipe for R1, something AI companies rarely share publicly. By being transparent about its methods, DeepSeek makes it more likely that its claims about V4 will be taken seriously.

The coding focus makes strategic sense. Software development is where AI currently generates the clearest return on investment for businesses. GitHub Copilot and similar tools have demonstrated that developers will pay for AI assistance that genuinely accelerates their work. A Chinese model that excels at code generation would compete directly with American products in a lucrative market.

New technical papers from DeepSeek propose fundamental rethinks of how foundational AI models are trained. Co-authored by founder Liang Wenfeng, these papers signal ambitious goals for the next generation of models. If the proposed approaches work, they could enable training larger models with less compute, extending DeepSeek’s efficiency advantages.

The timing around Chinese New Year has symbolic significance. It’s when Chinese tech companies traditionally make major announcements, benefiting from holiday media attention. A successful V4 launch would provide DeepSeek with momentum heading into what promises to be a competitive year in AI development.

Silicon Valley’s Response

American AI labs are taking the Chinese competition seriously after underestimating R1’s potential impact. In August 2025, OpenAI released its first open-weight language models since GPT-2, a move widely read as a response to competitive pressure from Chinese firms that had been more willing to share their work. The Model Context Protocol that Anthropic developed is being adopted broadly, partly to accelerate American AI development.

The revenue race has intensified alongside the capability race. OpenAI is reportedly targeting $30 billion in revenue for 2026, with internal documents suggesting aggressive growth expectations. Anthropic’s annual recurring revenue has reached nearly $7 billion, with 2026 targets of $15 billion. Both companies are betting that commercial success will fund continued research advantages.

Anthropic’s Daniela Amodei has articulated a philosophy of doing “more with less” that echoes DeepSeek’s efficiency focus. She argues the next phase of AI competition won’t be won by the biggest pre-training runs alone but by who can deliver the most capability per dollar of compute. If she is correct, DeepSeek’s approach might prove prescient rather than merely a workaround for limited hardware access.

The American response also includes policy dimensions. Export controls have tightened further, attempting to restrict China’s access to not just cutting-edge chips but also older hardware that might enable efficient training approaches. Whether these restrictions can maintain American advantages or simply motivate Chinese innovation remains debated among technology policy experts.

What This Means for the AI Industry

DeepSeek’s trajectory suggests the AI industry’s geographic concentration in America and a few allied countries may be less secure than assumed. If efficiency improvements can substitute for hardware advantages, export controls become less effective tools for maintaining technological leads. The competitive dynamics could shift more quickly than current policy frameworks anticipate.

For businesses adopting AI, Chinese competition could accelerate price declines and capability improvements. The rivalry between OpenAI, Anthropic, and DeepSeek creates pressure to deliver better products at lower prices. Enterprise customers benefit from this competition even if they prefer American providers for security or policy reasons.

The open-source dimension adds complexity. DeepSeek has been more willing than its American counterparts to share its methods and model weights. This openness accelerates global AI development, potentially undermining attempts to maintain concentrated advantages. It also creates opportunities for researchers and companies worldwide who couldn’t otherwise access frontier capabilities.

The Bottom Line

DeepSeek’s preparation for V4 represents the next phase of a competition that has already reshaped assumptions about AI development. The company demonstrated with R1 that Chinese AI can compete at the frontier despite hardware restrictions. V4 aims to extend that demonstration into coding, one of AI’s most commercially valuable applications.

American labs are responding with their own efficiency improvements and open-source releases, acknowledging that the competitive landscape has changed. The assumption that massive compute requirements would naturally concentrate AI leadership in wealthy countries with unrestricted chip access has been challenged, if not refuted.

Whether DeepSeek can sustain its momentum depends on factors including V4’s actual performance, continued access to necessary hardware, and the Chinese regulatory environment for AI development. The company has surprised observers before. Expecting it to do so again seems reasonable, even if the specific capabilities V4 delivers remain uncertain until February’s reveal.