ChatGPT can write you an email. Claude can help you brainstorm ideas. Gemini can search the web for you. But all of these AI tools share the same fundamental limitation: they can only advise. They can’t act. You still have to copy the email, paste it into Gmail, and click send. You still have to open the links yourself. You’re still doing the actual work.

That’s changing, fast. The new frontier is agentic AI, systems that have genuine agency. The ability to take actions on your behalf without constant hand-holding. Instead of just telling you the weather forecast looks bad, an agent can check the forecast, cancel your outdoor picnic reservation, and book a restaurant table instead. All without asking permission at each step.

It transforms AI from a consultant into an employee. And this shift is the most significant development since ChatGPT launched, with the potential to redefine how work gets done within the next 12-18 months.

What Agents Actually Do

This isn’t theoretical technology coming in five years. It’s happening now, in production environments. In software development, systems like Devin can plan features, write code, run tests, debug failures, and deploy applications autonomously. The human developer provides the goal, and the agent figures out how to accomplish it. In customer service, companies are deploying agents that autonomously resolve 60-70% of inquiries, processing refunds, updating account information, and handling complex multi-step requests without human intervention.

Personal AI assistants are making the jump from passive helpers to active agents. They can book entire travel itineraries by interacting with airline and hotel systems directly. They manage your calendar by automatically rescheduling conflicts. They handle email triage, responding to routine messages and flagging important ones for your attention.

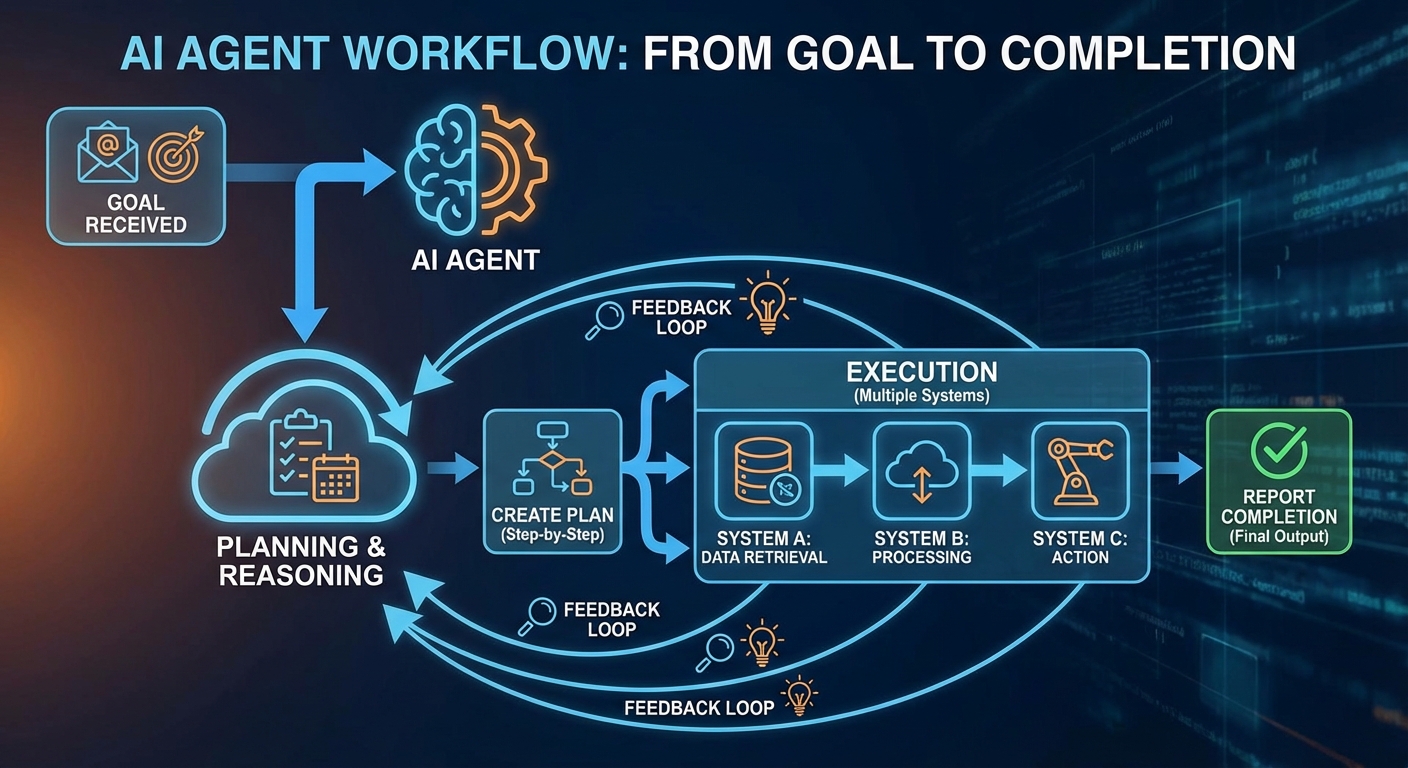

These systems work by combining Large Language Models with “function calling,” the ability to execute code and use APIs to interact with other software. They have planning capabilities that break complex goals into sequential steps. They maintain memory that lets them learn from past interactions and avoid repeating mistakes. While not fully autonomous in the sci-fi sense, they can handle the entire “messy middle” of task execution, freeing humans to define goals and review outcomes.

The Risks Are Real

Granting AI the power to act, not just advise, introduces severe risks that passive chatbots don’t create. Security researchers are sounding alarms about “prompt injection” attacks where malicious actors trick AI agents into taking harmful actions like deleting files, sending confidential data to attackers, or making unauthorized purchases. The alignment problem becomes critical when the AI can actually spend your money or modify your data. If the agent misunderstands your intent, the consequences go beyond a bad answer. They become real-world damage.

Economic disruption is the other massive concern. If AI agents can handle routine analysis, coding, and administrative tasks autonomously, job displacement becomes inevitable. Customer service representatives, data analysts, junior developers, and administrative assistants are particularly vulnerable. While optimistic projections talk about “augmentation” where AI helps humans be more productive, history suggests automation often eliminates jobs before creating new ones, if it creates them at all. The pattern we saw with AI in software development will likely repeat across knowledge work.

Privacy represents another fundamental challenge. For AI agents to be genuinely useful, they need deep access to your digital life: email, calendar, banking, health records, location data, and communication history. That requires a level of trust that many users, rightfully, may be hesitant to give. One security breach or privacy policy change could expose your entire digital life. It’s the same concern raised with personal AI assistants, but amplified because agents can actually take actions with that information.

The Race Is On

Every major tech company is sprinting toward agent capabilities. OpenAI is integrating agent features into ChatGPT. Google is building agents into Workspace products. Microsoft is adding autonomous capabilities to Copilot. Startups like Adept, Cognition, and others are building agent-first platforms from the ground up, betting that the future of AI is action, not conversation.

We can expect agent features to become standard in productivity tools like Microsoft Office and Google Workspace within 12-18 months. By 2027-2028, using an agent for routine tasks will likely be as common as using spell-check is today. The technology is ready. The companies are ready. The question is whether users are ready to hand over that much control.

The Bottom Line

AI agents are coming, whether we’re fully prepared for them or not. They will be incredibly useful, automating away tedious tasks and freeing up human attention for higher-level thinking. They will also be incredibly disruptive, displacing jobs and raising thorny questions about privacy, security, and control.

The best strategy is to start experimenting now. Learn what agents can do. Identify which of your tasks could be delegated. But proceed with caution. Read the privacy policies. Understand the security risks. Don’t blindly trust that the machine perfectly understands your intent. The age of AI that just talks is ending. The age of AI that acts is beginning. Make sure you’re ready for what that means.

Sources: AI industry analysis, technology research, enterprise software trends, security research.