In September 2024, researchers confirmed what many feared: deepfake detection tools can no longer reliably distinguish AI-generated content from reality. Accuracy rates have dropped to around 65%, not far above the 50% of a coin flip. The telltale signs that used to give away fake images and videos are gone: weird hands, unnatural lighting, odd reflections. All fixed.

We’ve entered an era where seeing is no longer believing, and the implications reach far beyond fake celebrity videos on social media. This affects courts, banks, elections, and personal safety.

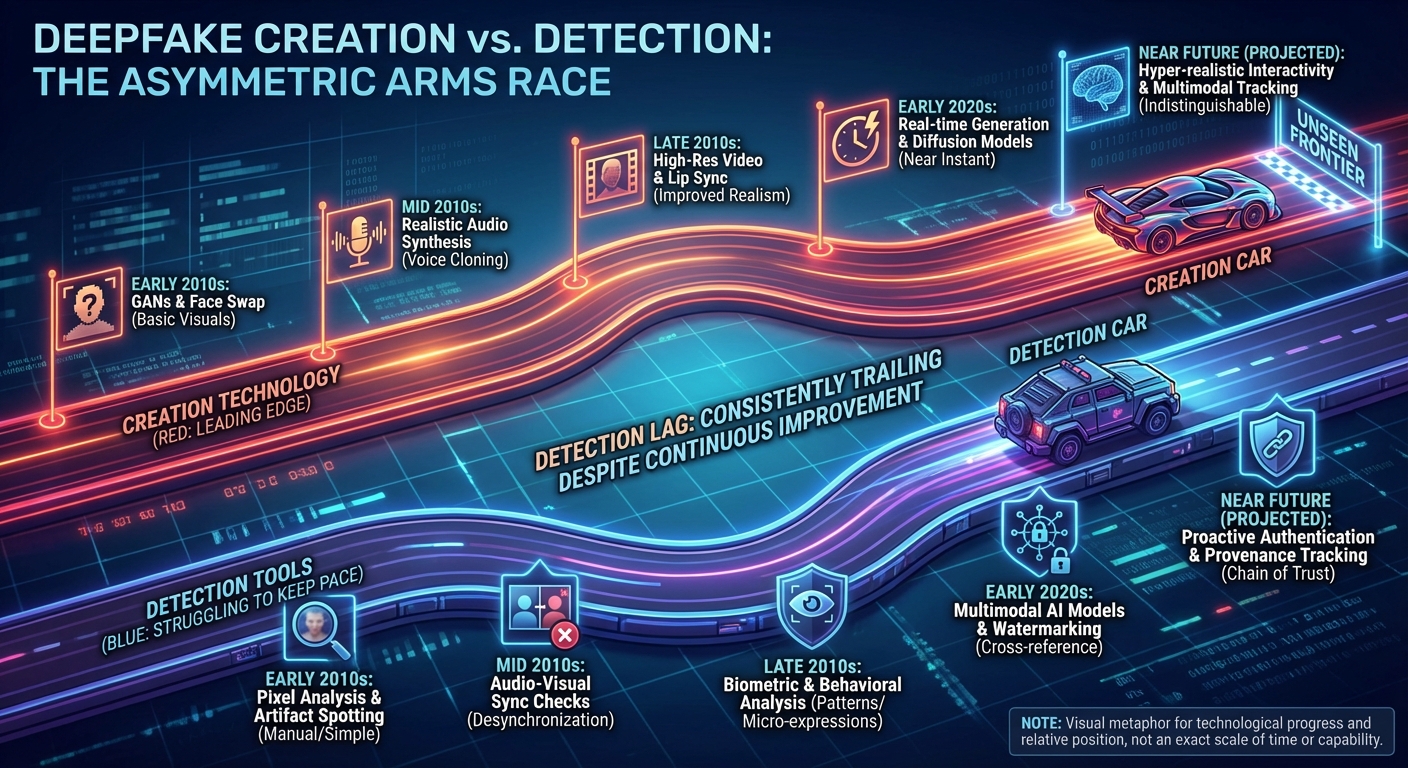

How We Lost the Arms Race

This wasn’t an accident. It was inevitable. Detection tools got better at spotting fakes, so AI developers trained their models specifically to fool those detection tools. It’s an arms race, and the offense just lapped the defense. Modern deepfakes use diffusion models and adversarial training to perfect every pixel. In effect, the generator practices against detection algorithms until it learns to pass undetected.

Current-generation tools can create Hollywood-quality fakes on consumer laptops. The “tells” are gone because the AI has been specifically optimized to eliminate them. Hands look natural. Lighting is physically accurate. Reflections are consistent with the scene. Blinking patterns match human baselines. Even the compression artifacts that used to reveal manipulation have been accounted for and corrected.

Real-time deepfakes are even more concerning. Systems can now impersonate voices and faces on live video calls with minimal processing lag. This breaks the fundamental assumption that real-time video communication is trustworthy. The same video calls that teams rely on every day, such as Zoom meetings, can now carry convincing live impersonations.

The Damage Is Already Happening

The theoretical concerns have become practical problems. Banks are seeing sophisticated fraud where “customers” pass video verification calls, only for the real account holder to report unauthorized access later. The deepfake impersonated them convincingly enough to fool security protocols designed to prevent exactly this kind of fraud.

Remote hiring fraud has emerged as a significant issue. Some remote jobs are being successfully obtained by AI avatars that conduct interviews, pass background checks, and even perform basic work tasks. The company thinks it hired a person. It hired an AI puppet.

In legal contexts, we’re seeing the “liar’s dividend” take effect. This is the phenomenon where any real video evidence can now be dismissed as potentially fake, creating reasonable doubt where none should exist. If a politician is caught on video saying something damaging, they can claim it’s a deepfake. Even if it’s real, the mere possibility creates enough uncertainty to undermine the evidence. Truth becomes unprovable, which is almost as dangerous as lies becoming undetectable.

Why Detection Failed

The fundamental problem is asymmetry. Creating deepfakes uses the same AI technology that’s advancing rapidly across all of machine learning. Detecting them requires finding patterns and artifacts that creators are specifically training their models to eliminate. As long as creators can test their fakes against detection tools and iterate, detection will always be playing catch-up.

The detection industry is now changing strategy. Instead of trying to analyze pixels to determine if something is fake, which is increasingly impractical, they’re focusing on “provenance.” This means cryptographically signing real content at the moment of creation. If your camera digitally signs a photo with a verifiable signature proving it came from that specific camera at that specific time, the burden of proof shifts. Unsigned content becomes suspect by default.

But this requires hardware changes in every camera and widespread adoption of new standards. Camera manufacturers, phone makers, and software platforms would all need to implement compatible signing systems. That’s a multi-year rollout at best, and it only works for new content going forward. It does nothing about the existing ocean of media that has no provenance verification. Similar challenges exist for AI technology across industries, where regulation struggles to keep pace with advancement.
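
To make the provenance idea concrete, here is a minimal Python sketch of the sign-at-capture, verify-later flow. It is an illustration under stated assumptions, not how any real camera or standard works: the names `DEVICE_KEY`, `sign_capture`, and `verify_capture` are hypothetical, and it uses an HMAC with a shared secret from the standard library as a stand-in for the public-key signatures a real provenance system would use.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical per-device secret, for illustration only. A real provenance
# scheme would keep a private key in secure hardware and publish a public key
# for verification; HMAC is a stand-in so this runs with the stdlib alone.
DEVICE_KEY = b"example-camera-secret"


def sign_capture(image_bytes: bytes, device_id: str, captured_at: str) -> dict:
    """Build a provenance record binding the image to a device and a time."""
    claim = {
        "device_id": device_id,
        "captured_at": captured_at,
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_capture(image_bytes: bytes, record: Optional[dict]) -> bool:
    """Return True only if a record exists, is authentic, and matches the image."""
    if record is None:
        return False  # unsigned content is treated as suspect by default
    payload = json.dumps(record["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["signature"]):
        return False  # the claim was altered or signed with a different key
    # The signature is valid; now check the image itself was not modified.
    return record["claim"]["sha256"] == hashlib.sha256(image_bytes).hexdigest()
```

Changing a single byte of the image, editing the device or timestamp in the claim, or presenting no record at all makes `verify_capture` return `False`. The sketch does not address the hard parts the article describes: distributing keys to every device and getting manufacturers and platforms to agree on a common format.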

How to Protect Yourself Now

For individuals, the immediate threat is impersonation fraud targeting your family and financial accounts. Establish “safe words” or verification questions with family members that you can use to confirm identity on phone or video calls. If someone claiming to be a loved one asks for money or sensitive information, verify through a separate channel before complying.

Don’t trust video or audio verification alone for high-stakes decisions. If your bank calls asking for verification, hang up and call them back using a number from their official website, not the number that called you. If you receive a video message from someone asking for help or money, verify through text message or in-person contact before responding.

Be skeptical of sensational video content, especially if it’s politically charged or comes from unknown sources. Check multiple reputable news sources before sharing or believing viral videos. The rule of thumb is: if it seems designed to make you angry or shocked, it’s worth extra verification before accepting it as real.

For businesses, update security protocols to assume that video and voice can be faked. Multi-factor authentication should include channels that are harder to fake simultaneously. For high-value transactions, require multiple verification methods across different communication channels.
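
As a rough illustration of what such a policy could look like in code, the Python sketch below approves a high-value transaction only when at least two distinct channels have passed and at least one of them is not video or voice. Everything here is an assumption made for the example: the channel names, the `HIGH_VALUE_THRESHOLD` figure, and the `approve_transaction` function are hypothetical, not taken from any real banking system.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only.
SPOOFABLE_CHANNELS = {"video_call", "voice_call"}  # assume these can be faked
HIGH_VALUE_THRESHOLD = 10_000  # amount at which stricter checks apply


@dataclass(frozen=True)
class Verification:
    channel: str   # e.g. "video_call", "hardware_token", "in_person"
    passed: bool   # whether the check on that channel succeeded


def approve_transaction(amount: float, checks: list[Verification]) -> bool:
    """Decide whether the completed checks are sufficient for this amount."""
    passed_channels = {c.channel for c in checks if c.passed}
    if not passed_channels:
        return False  # nothing verified at all
    if amount < HIGH_VALUE_THRESHOLD:
        return True  # low-value: any single passed check is accepted
    # High-value: need two or more distinct channels, and at least one
    # that is not video or voice.
    hard_to_fake = passed_channels - SPOOFABLE_CHANNELS
    return len(passed_channels) >= 2 and len(hard_to_fake) >= 1
```

Under this sketch, a video call plus a voice call is rejected for a large transfer because both can be faked together, while a video call plus a hardware token is accepted. The threshold and the rule for low-value transactions are design choices a real organization would set for itself.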

The Societal Implications

Beyond individual fraud, we’re facing a crisis of epistemic trust. If video evidence can be convincingly faked, and real video can be plausibly denied as fake, we lose a fundamental tool for establishing shared reality. Courts rely on video evidence. Journalism relies on documented proof. Democracy relies on voters having access to verifiable information about candidates.

The “liar’s dividend” is particularly corrosive. Politicians caught in genuine scandals can now claim any damning evidence is AI-generated. Corporations can dismiss whistleblower videos as fabrications. Anyone confronted with inconvenient truth has a ready-made excuse. Even when the evidence is real, the possibility of deepfakes provides cover.

We’re not ready for this. Legal systems haven’t adapted. Verification standards haven’t been established. Most people still assume that seeing a video of someone saying or doing something is roughly equivalent to proof. That assumption is now dangerous.

The Bottom Line

The technology for creating deepfakes is advancing faster than the technology for detecting them, and there’s no indication that gap will close. There is no software patch that fixes this problem. The only long-term solution is provenance systems that verify authenticity at creation, but those are years away from widespread implementation.

In the meantime, we have to fundamentally upgrade our skepticism. Video and audio are no longer reliable proof of anything on their own. We need multiple sources, verification through separate channels, and a much higher bar for what we accept as evidence. The era of “seeing is believing” is over. The era of “trust but verify everything, multiple ways” is here, whether we’re ready for it or not.

Sources: MIT Media Lab deepfake research, cybersecurity fraud reports, Content Authenticity Initiative.