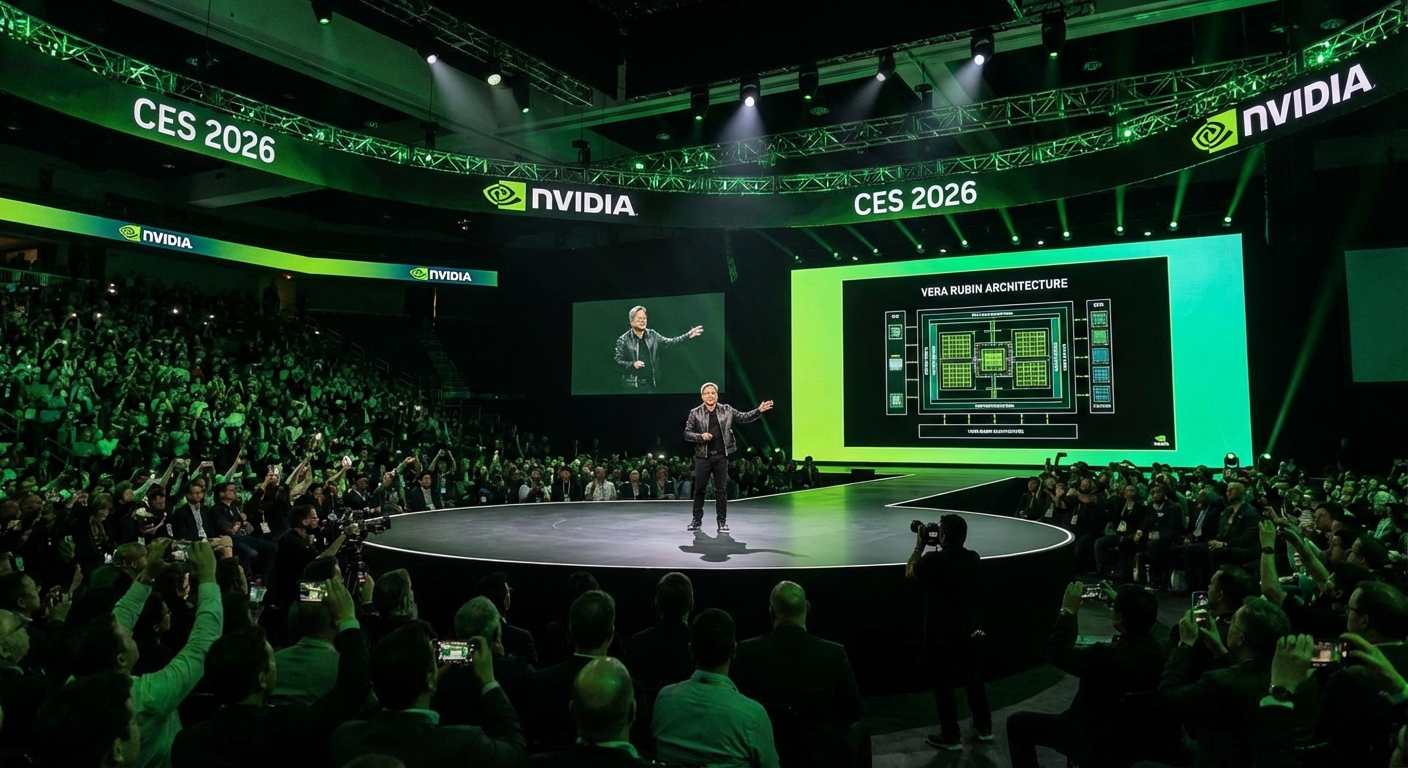

Jensen Huang took the stage at CES 2026 on Monday night and did what he does best: make every other tech company feel like they’re playing catch-up. In a 90-minute presentation that ranged from next-generation AI chips to autonomous Star Wars droids, the NVIDIA CEO unveiled Vera Rubin, the company’s most ambitious computing platform ever, and signaled that the AI revolution is about to get a lot more physical.

The announcements came fast and dense, covering everything from a new chip architecture promising 10x lower inference costs to open-source AI models across six scientific domains. For the $4.6 trillion company that has become synonymous with artificial intelligence, CES 2026 wasn’t just a product launch. It was a blueprint for the next decade of computing. Here’s everything you need to know.

Vera Rubin: The Next Generation of AI Supercomputing

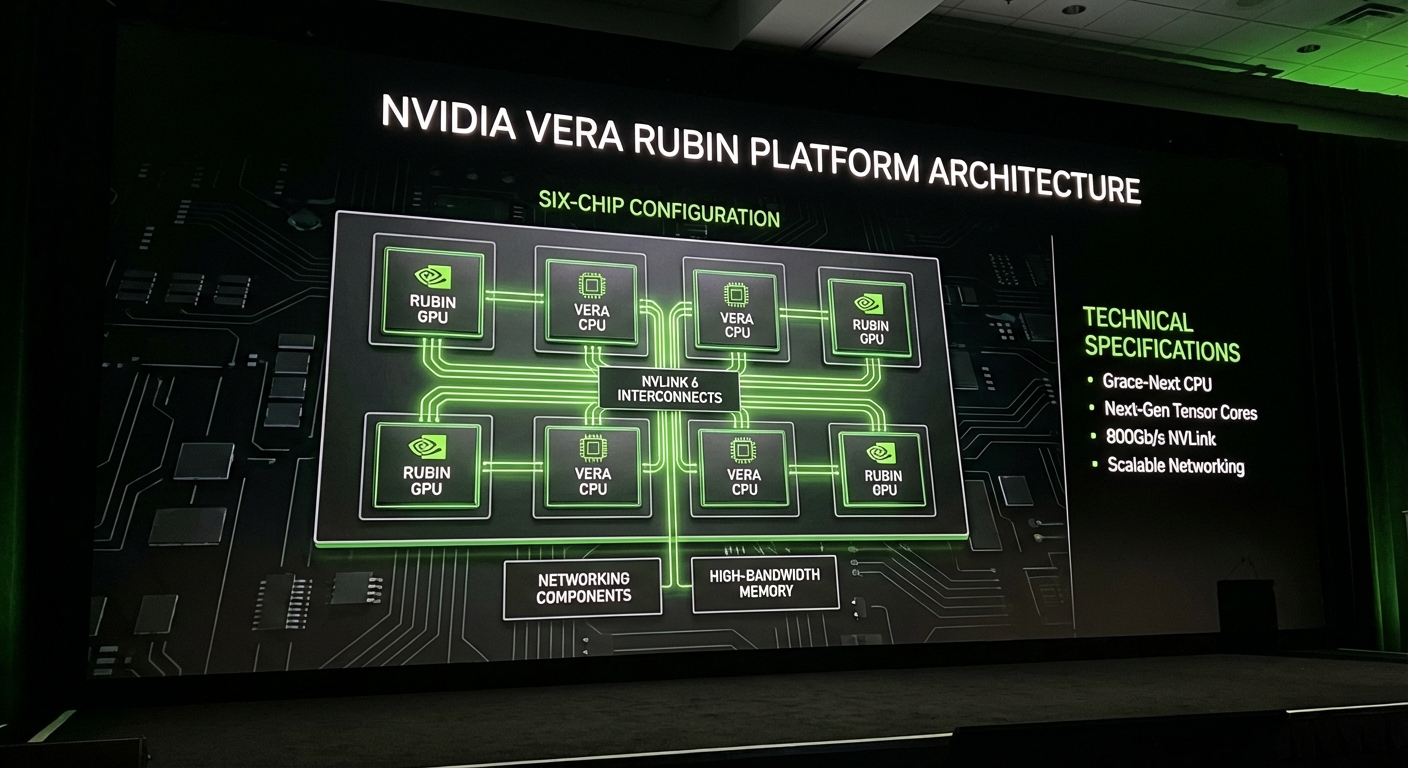

The headline announcement was Vera Rubin, NVIDIA’s first “extreme-codesigned” six-chip AI platform. Named after the astronomer whose galaxy rotation measurements provided some of the strongest evidence for dark matter, the platform is NVIDIA’s answer to a fundamental problem: AI computation demands are growing faster than traditional chip improvements can deliver.

Vera Rubin is now in full production, with broader availability expected in the second half of 2026. The numbers are staggering. Each Vera Rubin NVL72 supercomputer delivers 50 petaflops of NVFP4 inference capability, promising up to 5x greater inference performance than the current Blackwell generation. More importantly for the companies paying NVIDIA’s bills, the platform offers approximately one-tenth the cost per token of previous platforms.
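To put the cost claim in concrete terms, here is a back-of-the-envelope sketch in Python. Only the roughly one-tenth ratio comes from the keynote; the baseline price per million tokens and the monthly token volume are hypothetical placeholders, not NVIDIA figures, and the function name is made up for illustration.

```python
# Back-of-the-envelope sketch. The baseline price and token volume below are
# hypothetical; only the ~0.1 ratio reflects the keynote's claim.
COST_RATIO = 0.1  # "approximately one-tenth the cost per token"


def projected_cost(baseline_cost_per_million: float,
                   tokens: int,
                   ratio: float = COST_RATIO) -> float:
    """Return the cost of serving `tokens` tokens at `ratio` times the baseline price."""
    return baseline_cost_per_million * ratio * tokens / 1_000_000


if __name__ == "__main__":
    baseline = 2.00                  # hypothetical $ per million tokens today
    monthly_tokens = 5_000_000_000   # hypothetical monthly inference volume

    previous = projected_cost(baseline, monthly_tokens, ratio=1.0)
    claimed = projected_cost(baseline, monthly_tokens)

    print(f"previous platform:    ${previous:,.2f}")
    print(f"Vera Rubin (claimed): ${claimed:,.2f}")
```

Swapping in a real per-token price and traffic volume would show what the claim is worth to a given deployment, assuming the one-tenth figure holds for that workload.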

The architecture pairs Rubin GPUs, each with 336 billion transistors, with Vera CPUs, each packing 88 custom Olympus cores, 1.5TB of system memory, and 227 billion transistors. The system also includes NVLink 6 for chip interconnection, Spectrum-X Ethernet Photonics for networking, ConnectX-9 SuperNICs, and BlueField-4 DPUs for data processing.

Huang emphasized that everything in Vera Rubin is encrypted by default, addressing growing concerns about AI security and data privacy. In a quirky engineering detail, the system is cooled by hot water at 45 degrees Celsius, a counterintuitive approach that improves energy efficiency compared to traditional air cooling, in part because water that warm can be recirculated without energy-hungry chillers.

“Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing,” Huang told the audience. The platform represents NVIDIA’s bet that AI infrastructure needs don’t just grow, they compound, and that only purpose-built systems can keep pace.

Alpamayo: AI That Actually Drives

If Vera Rubin represents NVIDIA’s hardware future, Alpamayo represents its software ambitions for the physical world. The family of open-source reasoning models is designed specifically for autonomous vehicles, and it approaches driving fundamentally differently than previous systems.

The centerpiece is Alpamayo R1, which NVIDIA calls the “first open, reasoning VLA model for autonomous driving.” (VLA stands for vision-language-action.) The 10-billion-parameter system uses chain-of-thought reasoning to break unexpected driving situations into smaller problems before choosing the safest path forward. Instead of relying on pure pattern matching, Alpamayo reasons through scenarios more like a human driver would.

The practical implications are arriving quickly. Huang announced that Mercedes-Benz will bring AI-defined driving to the United States this year in the all-new CLA, built on NVIDIA’s DRIVE Hyperion platform with Alpamayo technology. The partnership signals that supervised autonomous driving is moving from concept to consumer reality, possibly before regulatory frameworks are ready for it.

NVIDIA also released AlpaSim, a fully open simulation blueprint for testing autonomous systems. The combination of open reasoning models and open simulation tools suggests NVIDIA wants to become the default platform for anyone developing self-driving technology, from legacy automakers to robotaxi startups. Lucid, Nuro, and Uber have already unveiled a production-ready robotaxi platform built on NVIDIA hardware.

Open Models Across Six Scientific Domains

Perhaps the most surprising element of the keynote was NVIDIA’s aggressive push into open-source AI models. The company released models trained on its own supercomputers across six domains, each designed to accelerate breakthroughs in fields beyond typical AI applications.

Clara targets healthcare applications, from medical imaging to drug discovery. Earth-2 focuses on climate science, enabling more accurate weather prediction and climate modeling. Nemotron handles reasoning and multimodal AI, the kind of general-purpose intelligence that powers chatbots and assistants. Cosmos generates realistic videos and synthesizes scenarios for robotics and simulation. GR00T powers embodied intelligence for humanoid robots. And Alpamayo, as discussed, handles autonomous driving.

The open-source strategy serves multiple purposes. It establishes NVIDIA hardware as the default training platform for cutting-edge models, creates ecosystem lock-in among researchers and developers, and positions the company as a contributor to scientific progress rather than just a chip vendor. When researchers use NVIDIA’s open models, they typically use NVIDIA’s hardware to run them.

Physical AI: Robots Take Center Stage

The phrase “physical AI” appeared repeatedly throughout the keynote, signaling NVIDIA’s strategic pivot from powering chatbots to powering robots. The company released a comprehensive stack of robot foundation models, simulation tools, and edge hardware designed to become the default platform for generalist robotics.

The highlight came when BDX droids from Star Wars appeared on stage, entirely autonomous and trained on NVIDIA Cosmos. The theatrical moment underscored a serious point: robotics is moving from specialized industrial applications to general-purpose systems that can operate in unpredictable environments.

NVIDIA announced expanded partnerships with Boston Dynamics, Franka, Siemens, Synopsys, and Cadence to build out the physical AI ecosystem. The Siemens partnership particularly stood out, showing how NVIDIA’s full stack integrates with industrial software from design and simulation through production. For manufacturers, this promises AI-powered factories where digital twins and physical systems work seamlessly together.

The robotics push connects directly to NVIDIA’s hardware business. Robots need edge computing for real-time decision making, cloud computing for training and updates, and simulation environments for safe development. NVIDIA sells solutions across all three, making robotics potentially more lucrative than the data center AI that currently dominates revenue.

Gaming Gets an Upgrade Too

Lest anyone forget NVIDIA’s origins, the company also announced significant gaming updates in a separate GeForce On briefing following the main keynote. DLSS 4.5 introduces Dynamic Multi Frame Generation with a new 6X mode, trained on a second-generation transformer model that should reduce the ghosting and shimmering artifacts that plagued earlier versions.
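As a rough illustration of what a 6X mode implies, the Python sketch below assumes that the multiplier means six displayed frames for every traditionally rendered one, so five of every six are AI-generated. That reading follows how earlier Multi Frame Generation modes were described and is an assumption here, not a specification; the function names and the 40 fps input are hypothetical.

```python
# Illustrative arithmetic only. Assumes an NX mode shows N frames per rendered
# frame (1 rendered + N-1 generated); this is an assumption, not NVIDIA's spec.

def generated_per_rendered(multiplier: int) -> int:
    """Number of AI-generated frames inserted for each rendered frame."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return multiplier - 1


def displayed_fps(rendered_fps: float, multiplier: int) -> float:
    """Upper-bound displayed frame rate, ignoring generation overhead."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return rendered_fps * multiplier


if __name__ == "__main__":
    base = 40.0  # hypothetical rendered frame rate
    for mode in (2, 4, 6):
        print(f"{mode}X: {generated_per_rendered(mode)} generated per rendered, "
              f"up to {displayed_fps(base, mode):.0f} fps displayed")
```

Real-world gains would be lower than this upper bound, since generating frames has its own cost, and the "Dynamic" part of the feature name suggests the multiplier is not fixed during play.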

More than 250 games now support DLSS 4 technology, and the ecosystem continues expanding. G-Sync Pulsar monitors, featuring perceived motion clarity equivalent to 1,000Hz displays, will open for pre-orders on January 7. GeForce NOW cloud gaming is expanding to Linux PCs and Amazon Fire TV, bringing NVIDIA’s gaming capabilities to platforms where installing a physical GPU isn’t practical.

These gaming announcements won’t move NVIDIA’s stock price, but they maintain the enthusiast community that made the company what it is. When gamers evangelize NVIDIA, they create future AI customers, engineers, and investors. The brand value of GeForce extends far beyond gaming revenue.

What It All Means

NVIDIA’s CES 2026 keynote painted a picture of a company that sees AI expanding from data centers into every aspect of the physical world. Cars will drive themselves using NVIDIA chips and models. Factories will optimize themselves using NVIDIA simulations. Robots will navigate unpredictable environments using NVIDIA-trained intelligence. And scientists will accelerate discoveries using NVIDIA’s open research tools.

The Vera Rubin platform ensures NVIDIA maintains its hardware advantage as AI computation demands explode. The open model strategy creates ecosystem lock-in among developers and researchers. The physical AI push opens new markets beyond the hyperscaler data centers that currently drive most revenue. And the gaming updates keep the enthusiast community engaged.

For competitors like AMD, which presented its own CES keynote just hours earlier, the message was clear: NVIDIA isn’t just defending its AI lead, it’s expanding into new territories before anyone else arrives. The company that started making graphics cards for video games is now positioning itself as the platform for intelligent machines of all kinds.

Whether that vision fully materializes depends on factors beyond NVIDIA’s control: regulatory frameworks for autonomous vehicles, adoption curves for industrial robotics, and the fundamental question of whether AI can deliver on its transformative promises. But after CES 2026, no one doubts that NVIDIA is betting everything on that future arriving.