Political campaigns are using AI to write fundraising emails that raise millions, create micro-targeted ads designed to sway voters, and help craft policy positions based on what resonates with specific demographics. Most voters have no idea it’s happening.

The 2024 election was the first in which AI tools were deployed at scale. Both parties used them for everything from copywriting to opposition research. According to some campaign consultants, AI-written fundraising emails outperform human-written ones by 30 to 50%, and AI-targeted ads reach voters with eerie precision. The campaigns using these tools most effectively are winning, setting off an arms race that is reshaping American democracy in real time.

How Campaigns Use AI

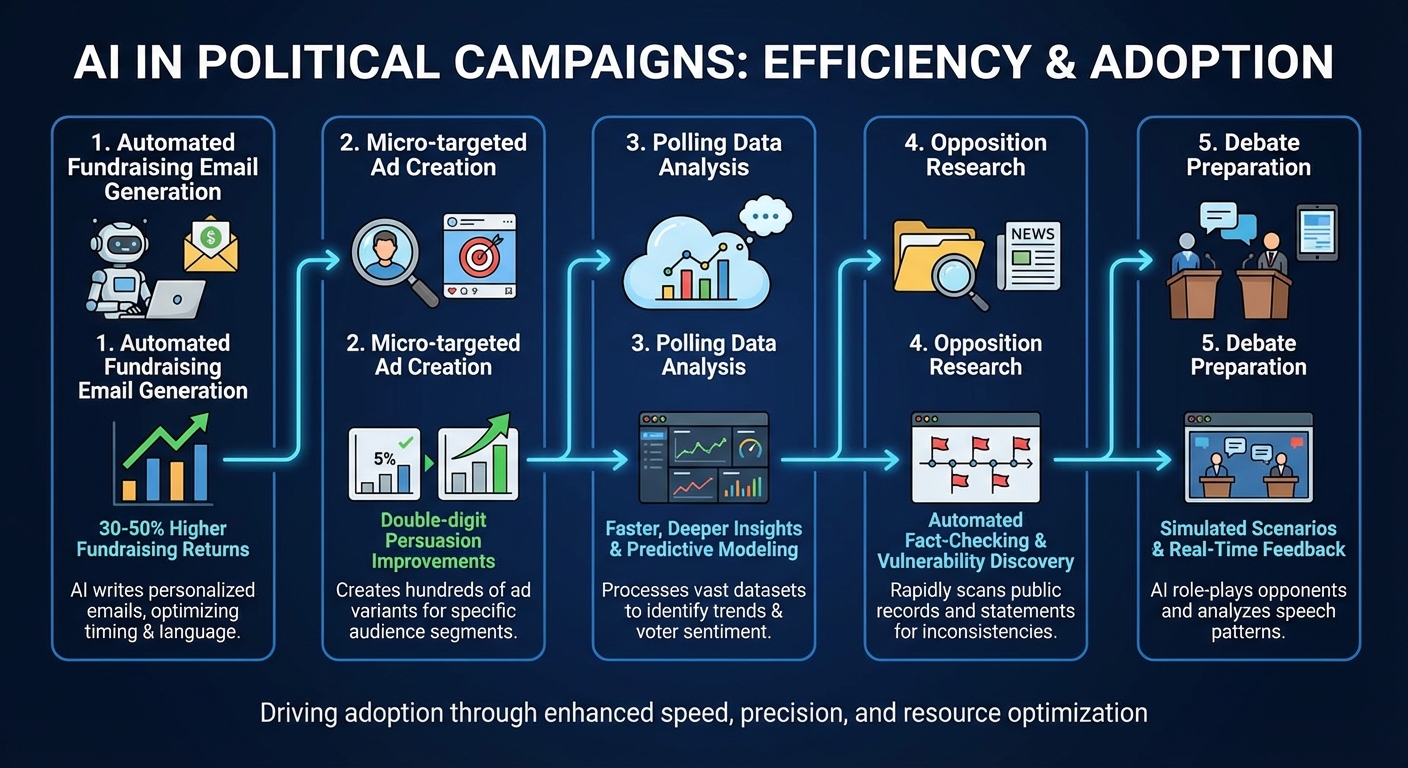

The applications are extensive. AI generates thousands of ad variations, each aimed at a specific voter segment: suburban moms see one message, rural veterans see another, and algorithms analyzing response data keep optimizing both. AI tools can scan years of an opponent’s speeches for contradictions in seconds, a task that used to take research teams weeks. They also analyze polling data and suggest strategy pivots faster than any human consultant.

Debate prep has evolved too. AI simulators now role-play opponents, predicting attacks and testing responses. This isn’t future tech. It’s standard operating procedure for any competitive campaign today.

The Effectiveness Problem

The results are striking. Campaigns report fundraising returns as much as 50% higher and double-digit improvements in voter persuasion. But there’s a transparency gap: voters usually can’t tell when a machine wrote the message in front of them. That “personal” email from a candidate? Quite possibly AI-written. That ad speaking perfectly to your concerns? Likely generated by an algorithm from your digital profile.

This lack of disclosure raises serious questions. We’re being persuaded at scale with messages tailored to our psychological profiles, without knowing who or what wrote them. The manipulation isn’t illegal, but it’s invisible.

The Regulation Gap

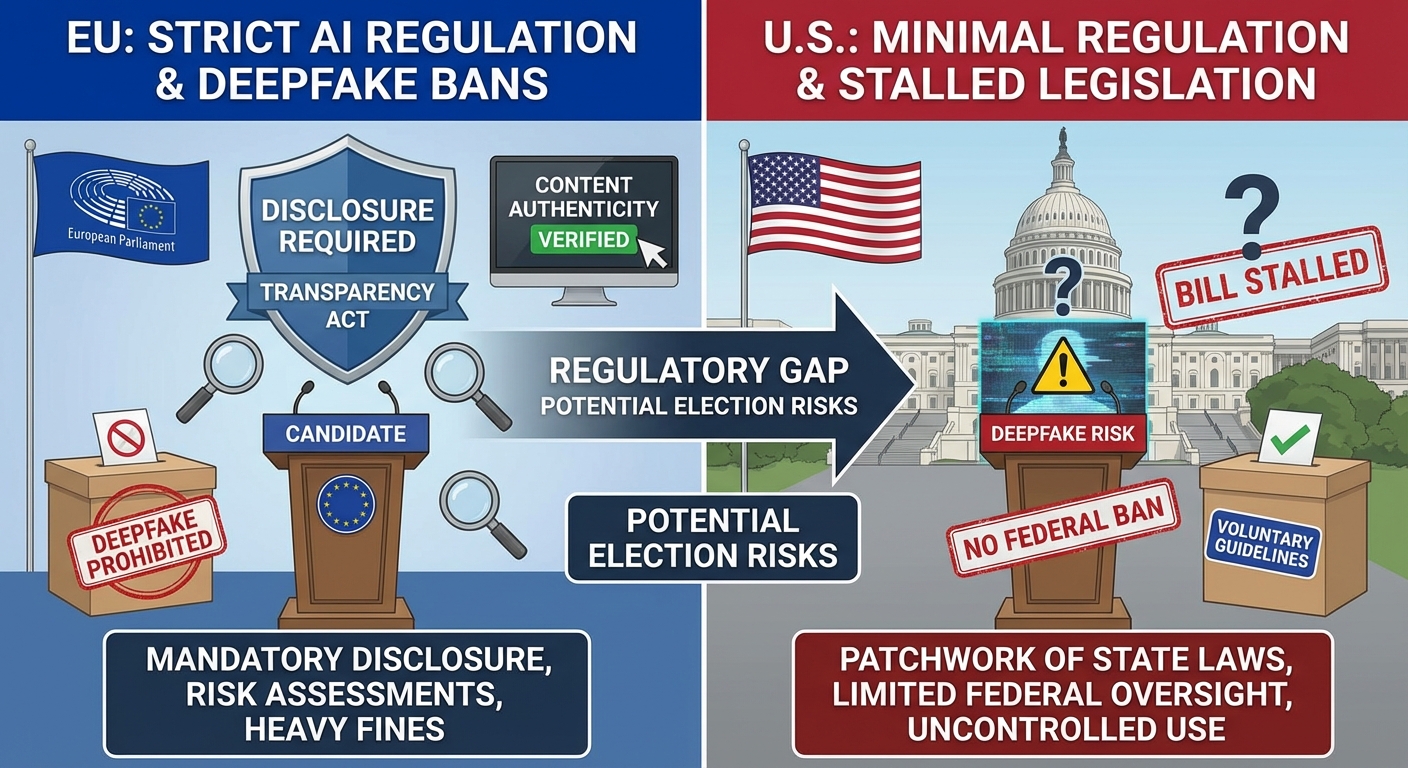

The U.S. is a Wild West for political AI. The EU has moved to require disclosure of AI-generated content, but American federal law hasn’t caught up. The risks are real: deepfakes of candidates, synthetic endorsements, and the erosion of a shared reality. Wealthy campaigns gain yet another advantage, buying better algorithms to dominate the information space.

Proposed regulations, including mandatory disclosure requirements and bans on deepfakes, have stalled in a gridlocked Congress. Meanwhile, the technology advances daily, outpacing every attempt at oversight.

What’s Next

Real-time AI adaptation during debates. Synthetic volunteers making voter calls. AI avatars campaigning for candidates. These aren’t distant possibilities. Some are already being tested. Voters now need to assume every piece of political content could be machine-generated. Skepticism is the only defense.

We’re entering an era where human authenticity becomes the premium product in politics, yet it’s harder than ever to verify. The campaigns that figure out how to blend AI efficiency with genuine human connection will dominate the next decade of elections.

The Bottom Line

AI has fundamentally changed campaigning. The genie is out of the bottle. Campaigns that use AI win; those that don’t, lose. Whether this leads to a more responsive democracy or more manipulated voters depends on regulations that don’t yet exist. For now, the machines are running the show, and most voters don’t even know they’re watching. For more on technology reshaping politics, see the TikTok ban that never happened. And for another case where tech policy can’t keep up with innovation, check out Section 230’s day in court.

Sources: Campaign strategy consultants, political technology researchers, Federal Election Commission filings.