The core of Section 230 of the Communications Decency Act is a 26-word provision that essentially built the internet as we know it: “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

In plain English, websites generally aren’t legally responsible for what their users post. Facebook isn’t liable for defamatory posts, YouTube isn’t sued over every harmful video a user uploads, and Yelp doesn’t face bankruptcy from negative reviews. This immunity enabled the rise of social media, forums, and the participatory internet. Now the Supreme Court is hearing cases that could weaken or eliminate this protection, and the implications would be massive.

The Legal Challenge

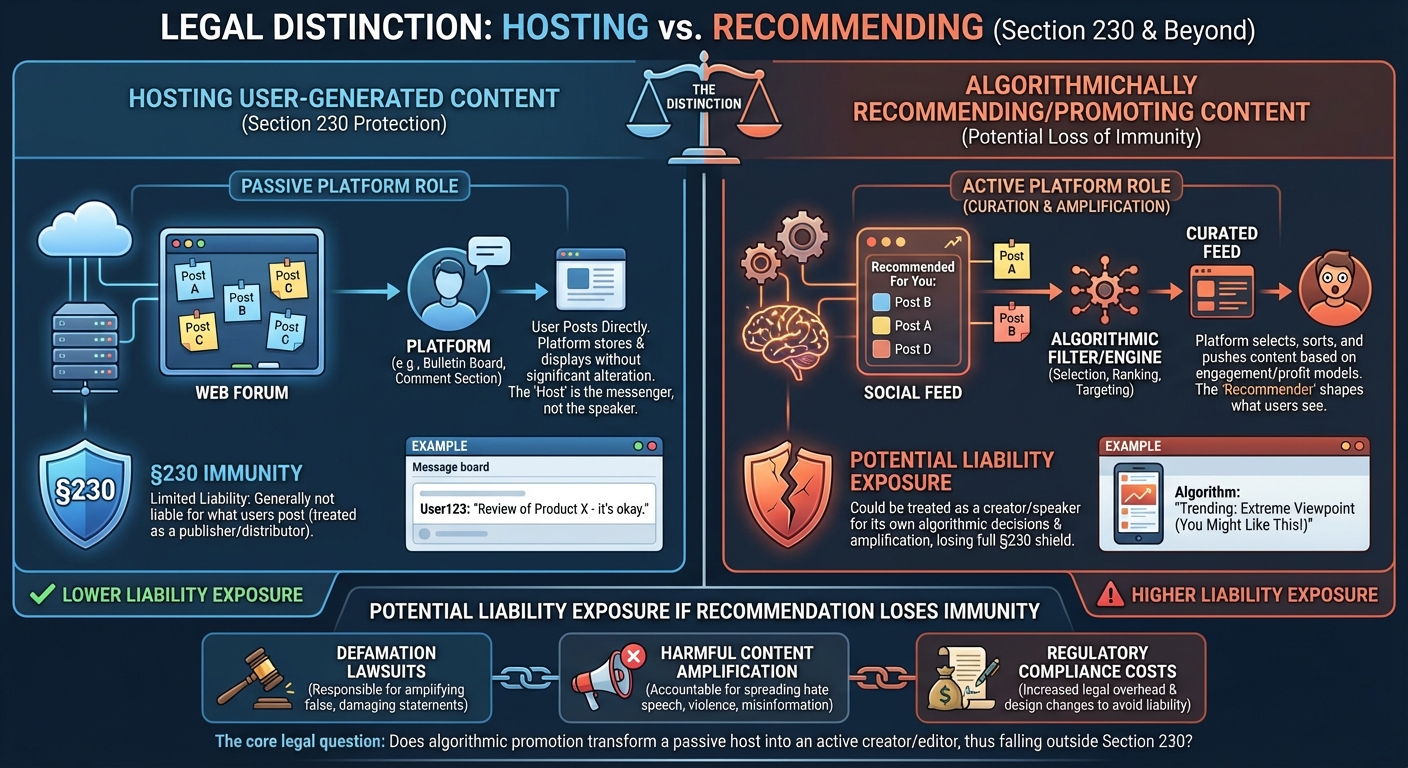

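Two major cases, Gonzalez v. Google and Twitter v. Taamneh, test how far platform liability extends. Gonzalez challenges the scope of Section 230 immunity directly, while Taamneh asks whether platforms can be held liable under the Anti-Terrorism Act for aiding and abetting terrorism. The central argument in Gonzalez: while platforms might be immune for hosting content, they should be liable for recommending it. Plaintiffs argue YouTube’s algorithm actively promoted terrorist content, moving the platform from passive host to active publisher.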

If the Court rules that algorithmic recommendation is distinct from hosting and not protected by Section 230, it could break the business model of the modern internet. Every social media feed, search result, and recommendation engine relies on algorithms to sort content. Removing immunity for these functions would expose platforms to endless litigation for simply showing users what they might want to see.

What Changes If Section 230 Weakens

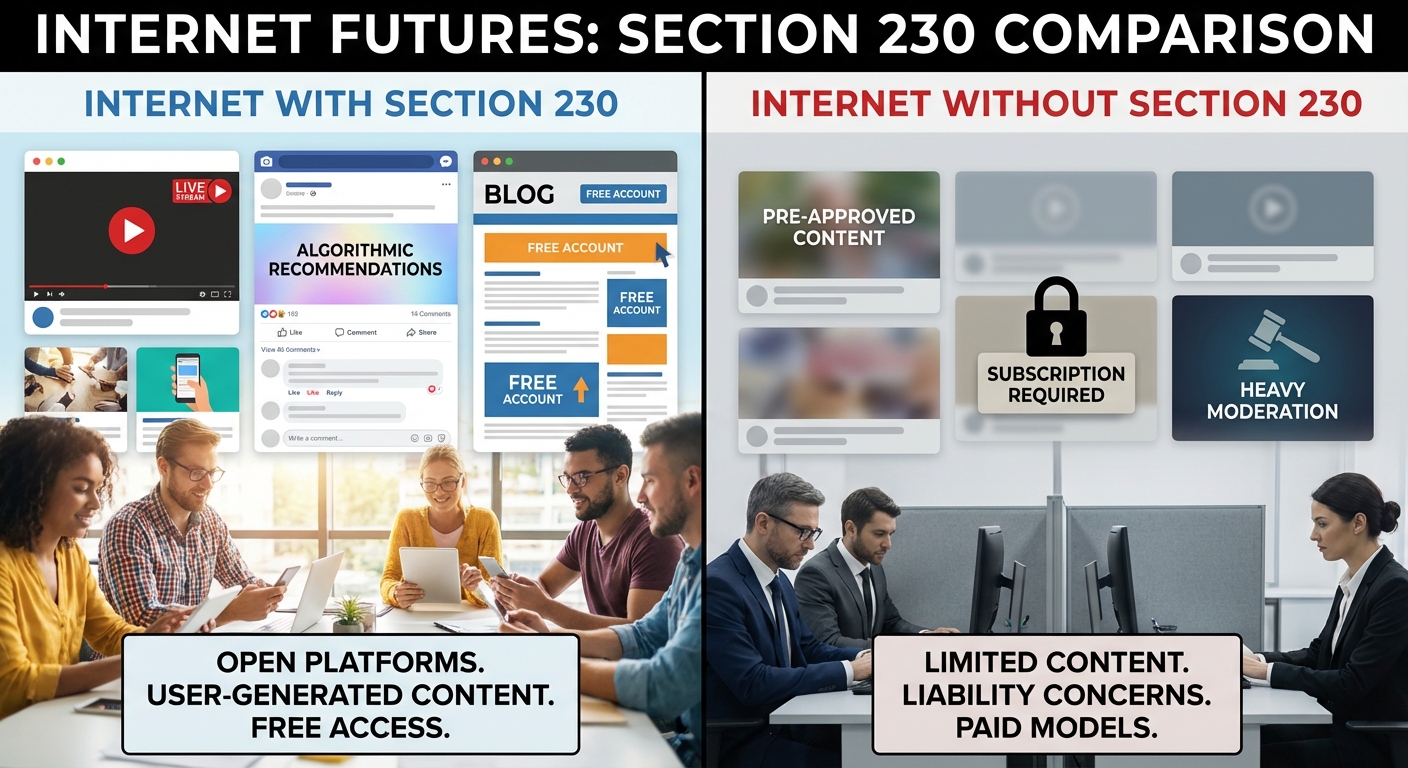

If Section 230 is significantly weakened, the internet changes overnight. To avoid liability, platforms would implement massive automated censorship. Anything controversial, political, or slightly risky would be blocked by default. The “open” internet where anyone can post would become a curated, sanitized environment where only “safe” speech is allowed.

The economic impact would be equally severe. Content moderation is expensive. Facebook employs tens of thousands of moderators. If liability increases, these costs explode, likely killing free ad-supported models in favor of paid subscriptions. Worse, small startups and competitors would be wiped out. Only giants like Google and Meta have the resources to build the necessary legal and moderation infrastructure, which would effectively entrench their dominance.

The Debate

Critics argue Section 230 is outdated, shielding trillion-dollar companies from accountability for real harms like harassment, radicalization, and dangerous misinformation. They say platforms shouldn’t profit from amplifying hate speech without consequence.

Supporters counter that Section 230 is the only thing preserving free speech online. Removing it would force platforms to silence marginalized voices and controversial opinions to protect their bottom line. The trade-off is real: more safety might mean less freedom, more accountability might mean less innovation.

The Bottom Line

Section 230 faces its most serious legal challenge since it was enacted in 1996. What the Supreme Court decides will determine whether the internet remains a place for open discourse or becomes a restricted, corporate-controlled garden. The stakes are high. This isn’t a theoretical policy debate. It’s about the fundamental architecture of how we communicate online. For more on how tech regulation is struggling to keep up with innovation, see the TikTok ban that never happened. And for another example of AI outpacing policy, check out how AI is reshaping political campaigns.

Sources: Supreme Court filings, Section 230 legal analysis, technology policy research.