Sam Altman cooked garlic on camera. That’s how we knew GPT-5.2 was coming.

The OpenAI CEO posted a video of himself preparing a garlic-heavy dish on December 10, a not-so-subtle hint about the company’s latest model, internally codenamed “Garlic.” Twenty-four hours later, OpenAI officially launched GPT-5.2, its most advanced AI model yet, on the exact same day Google unveiled its Gemini Deep Research agent. The timing wasn’t coincidental. This is what an AI arms race looks like in 2025, and the stakes have never been higher.

For months, OpenAI has been watching Google gain ground. Google’s Gemini app now boasts 650 million monthly active users, while AI Overviews reaches 2 billion people monthly. Those numbers reportedly prompted Altman to issue an internal “code red” memo earlier this month, redirecting company resources to accelerate ChatGPT improvements. The GPT-5.2 launch was OpenAI’s answer, and based on the benchmarks, it’s a formidable one.

What GPT-5.2 Actually Brings to the Table

Let’s cut through the marketing language. GPT-5.2 represents a genuine leap in capability, not just an incremental improvement.

The model features a massive 400,000-token context window, meaning it can process hundreds of documents or large code repositories in a single conversation. That’s roughly 300,000 words, or about five full-length novels, all at once. The 128,000-token output limit lets it generate extensive reports or complete applications without the truncation issues that plagued earlier models.
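The word counts above follow from simple arithmetic. Here is a minimal sketch of that conversion, assuming the common rule of thumb of about 0.75 English words per token. That ratio is an assumption for illustration, not a figure from OpenAI’s announcement, and the real ratio varies with language and content.

```python
# Rough token-to-word conversion for the limits quoted in the article.
# WORDS_PER_TOKEN is an assumed rule of thumb, not an official figure.
WORDS_PER_TOKEN = 0.75

CONTEXT_WINDOW_TOKENS = 400_000  # context window cited in the article
MAX_OUTPUT_TOKENS = 128_000      # output limit cited in the article


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * words_per_token)


context_words = tokens_to_words(CONTEXT_WINDOW_TOKENS)
output_words = tokens_to_words(MAX_OUTPUT_TOKENS)

# The "five novels" comparison implies this average length per novel.
implied_words_per_novel = context_words / 5

print(f"Context window: ~{context_words:,} words")
print(f"Output limit:   ~{output_words:,} words")
print(f"Implied novel length: ~{implied_words_per_novel:,.0f} words")
```

Under that assumption, the “five novels” comparison works out to about 60,000 words per book, which is on the short side for a full-length novel.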

OpenAI structured GPT-5.2 into three tiers: Instant optimizes for speed on routine queries like writing and translation, Thinking handles complex structured work including coding, math, and planning, and Pro delivers maximum accuracy for the most difficult problems. This tiered approach lets users match compute resources to task complexity, something enterprise customers have been requesting for years.
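To make the tiering concrete, here is a hypothetical sketch of how an application might match a task to a tier. The `Tier` enum, the `pick_tier` function, and the `high_stakes` flag are illustrative names invented for this example. They are not part of any OpenAI API, and the task categories are simply the ones listed above.

```python
from enum import Enum


class Tier(Enum):
    """Hypothetical labels for the three tiers described in the article."""
    INSTANT = "instant"
    THINKING = "thinking"
    PRO = "pro"


# Task categories taken from the article's description of each tier.
ROUTINE_TASKS = {"writing", "translation"}
STRUCTURED_TASKS = {"coding", "math", "planning"}


def pick_tier(task_type: str, high_stakes: bool = False) -> Tier:
    """Choose a tier for a task; the routing rules here are assumptions."""
    if high_stakes:
        # Accuracy matters most, so accept the slowest, costliest tier.
        return Tier.PRO
    if task_type in ROUTINE_TASKS:
        return Tier.INSTANT
    if task_type in STRUCTURED_TASKS:
        return Tier.THINKING
    # Unknown task types fall back to the middle tier (an arbitrary choice).
    return Tier.THINKING


print(pick_tier("translation"))              # Tier.INSTANT
print(pick_tier("coding"))                   # Tier.THINKING
print(pick_tier("coding", high_stakes=True)) # Tier.PRO
```

The point of the sketch is the trade-off itself: routine work goes to the fast tier, and extra compute is spent only when the task calls for it.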

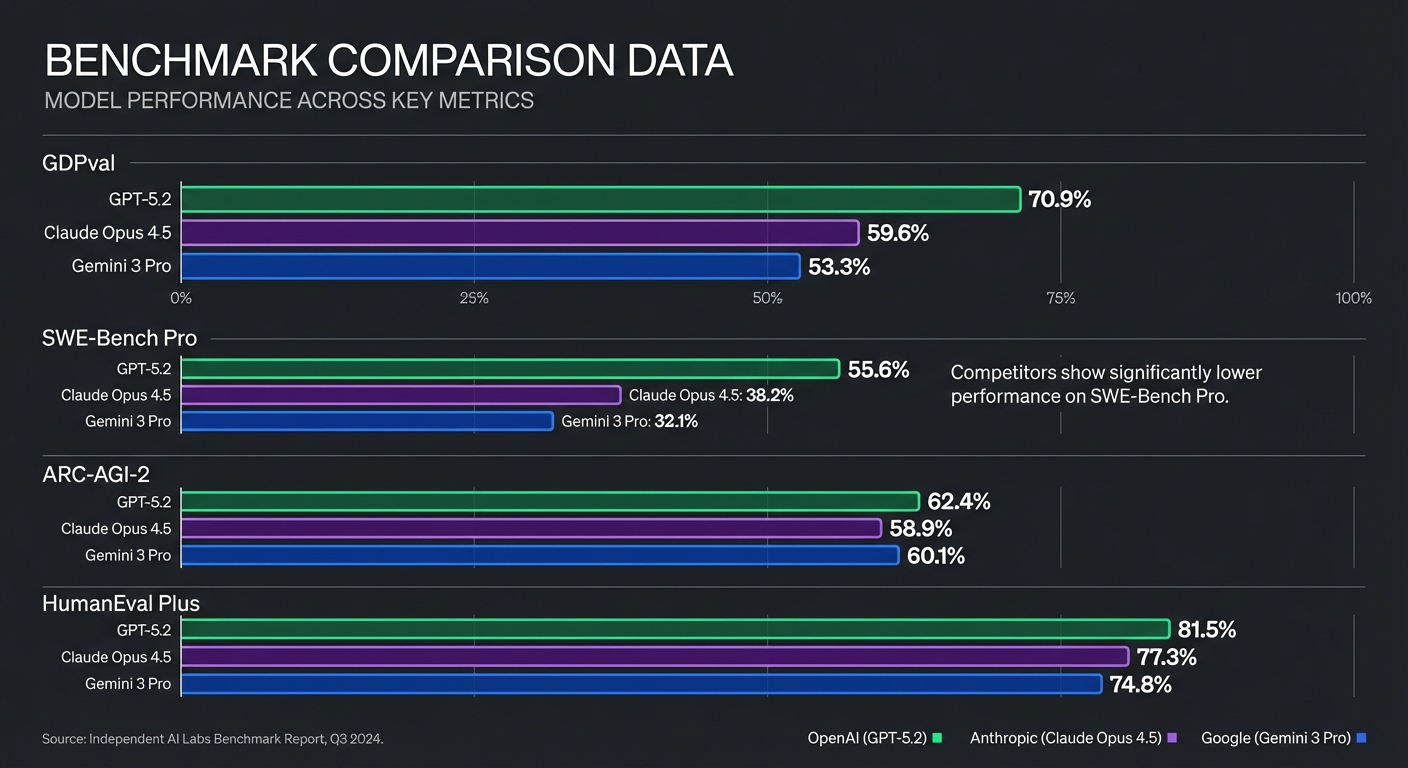

The benchmark numbers tell a compelling story. On OpenAI’s GDPval benchmark, GPT-5.2 met or exceeded human expert performance 70.9% of the time, according to OpenAI’s official announcement. That compares with 59.6% for Anthropic’s Claude Opus 4.5 and just 53.3% for Google’s Gemini 3 Pro. On SWE-Bench Pro, a software development benchmark, GPT-5.2 scored 55.6%, outperforming Gemini 3 Pro by more than 12 percentage points. Perhaps most impressively, on ARC-AGI-2, a benchmark designed to test genuine reasoning ability, GPT-5.2’s Pro variant scored 54.2%, significantly ahead of Claude Opus 4.5 at 37.6%.
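For readers who want the gaps in percentage points, the scores quoted above can be compared with a few lines of arithmetic. This sketch uses only the figures cited in this article. The `lead_in_points` helper is a name made up for the example, and SWE-Bench Pro is left out because Gemini 3 Pro’s exact score is not given here.

```python
# Scores (in percent) exactly as quoted in the article.
scores = {
    "GDPval": {
        "GPT-5.2": 70.9,
        "Claude Opus 4.5": 59.6,
        "Gemini 3 Pro": 53.3,
    },
    "ARC-AGI-2": {
        "GPT-5.2 Pro": 54.2,
        "Claude Opus 4.5": 37.6,
    },
}


def lead_in_points(benchmark_scores: dict, leader: str) -> dict:
    """Return the leader's margin over each rival, in percentage points."""
    top = benchmark_scores[leader]
    return {
        name: round(top - score, 1)
        for name, score in benchmark_scores.items()
        if name != leader
    }


gdpval_lead = lead_in_points(scores["GDPval"], "GPT-5.2")
arc_lead = lead_in_points(scores["ARC-AGI-2"], "GPT-5.2 Pro")

for rival, gap in gdpval_lead.items():
    print(f"GDPval: GPT-5.2 leads {rival} by {gap} points")
for rival, gap in arc_lead.items():
    print(f"ARC-AGI-2: GPT-5.2 Pro leads {rival} by {gap} points")
```

On these figures, the GDPval lead is 11.3 points over Claude Opus 4.5 and 17.6 points over Gemini 3 Pro, and the ARC-AGI-2 lead over Claude Opus 4.5 is 16.6 points.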

Google’s Simultaneous Counter-Move

Google didn’t just sit back and watch. On the exact same day, the company launched Gemini Deep Research, its most sophisticated research agent to date.

Deep Research represents Google’s bet that the future of AI lies in agentic systems that can autonomously conduct complex research tasks. Rather than simply answering questions, it can break down research problems, search across multiple sources, synthesize findings, and present comprehensive reports. According to TechCrunch’s coverage, Gemini Deep Research achieved a state-of-the-art 46.4% on the full Humanity’s Last Exam benchmark, 66.1% on DeepSearchQA, and 59.2% on BrowseComp.

The simultaneous launches don’t look accidental. Both companies appear to have known what the other was planning and chose to compete head-to-head rather than yield the news cycle. It’s a level of direct competition that recalls the browser wars of the late 1990s, but with far higher stakes and faster iteration cycles.

The “Code Red” That Started It All

Understanding this moment requires understanding the panic that preceded it.

Earlier this month, CNBC reported that Altman circulated an internal memo declaring a “code red” at OpenAI. The term deliberately echoed Google’s own code red from three years ago, when ChatGPT’s launch caught the search giant off guard and set off a scramble to hire Chief AI Officers across corporate America. Now the tables had turned. Google’s aggressive rollout of Gemini features across its product ecosystem, combined with Anthropic’s steady gains in enterprise adoption, had put OpenAI on the defensive.

The memo reportedly directed teams to prioritize ChatGPT improvements over other projects. “We need to be moving faster,” one internal source told reporters. OpenAI’s valuation has swelled to $500 billion, and the company has announced infrastructure investments totaling $1.4 trillion over the coming years. That kind of capital requires maintaining technological leadership, not just keeping pace.

For its part, OpenAI quickly moved to highlight its enterprise success. According to VentureBeat, ChatGPT message volume has grown 8x since November 2024, with enterprise workers reporting they save up to an hour daily using the platform. These aren’t just vanity metrics. They represent the revenue streams that justify OpenAI’s massive infrastructure investments.

Regulatory Clouds on the Horizon

The competition isn’t happening in a vacuum. Just days before the dueling launches, a coalition of state attorneys general sent letters to OpenAI, Google, Microsoft, and ten other major AI companies, warning them to address what they called “delusional outputs” or face potential legal action under state consumer protection laws.

The letters, reported by TechCrunch, came after a string of disturbing incidents involving AI chatbots and mental health. The attorneys general demanded new internal safeguards, putting pressure on companies racing to release increasingly powerful models.

Meanwhile, President Trump signed an executive order limiting states’ ability to regulate AI, setting up a potential federal-state clash over technology governance. The regulatory landscape remains fragmented and uncertain, even as the technology accelerates.

What Happens Next

The AI industry is also trying to prevent future fragmentation. OpenAI, Anthropic, and Block recently joined forces under the Linux Foundation to create the Agentic AI Foundation, aimed at standardizing infrastructure so AI agents from different companies can communicate with each other. It’s an acknowledgment that unchecked competition could create compatibility nightmares reminiscent of the early mobile operating system wars.

Looking ahead, The Information has reported on a broader architectural shift within OpenAI under the “Garlic” codename, potentially arriving as GPT-5.5 in early 2026. This project reportedly represents a breakthrough in pretraining efficiency, creating smaller models that retain the knowledge of much larger systems while reducing computational costs.

The Bottom Line

The AI war between OpenAI and Google has entered a new phase. Gone are the days when releases were spaced months apart, giving the industry time to absorb each advancement. Now we’re seeing same-day competitive launches, internal “code red” memos, and trillion-dollar infrastructure commitments.

For users and businesses, this competition means faster improvements and more powerful tools. For the companies involved, it means an exhausting sprint with no finish line in sight. And for regulators, it means trying to keep pace with technology that evolves faster than any previous innovation cycle.

One thing is clear: the garlic is just the appetizer. The main course is still being prepared.

Sources: OpenAI, TechCrunch, CNBC, VentureBeat, The Information.